It's been a few days since the shooting at YouTube's headquarters in San Bruno, California, but already a new wave of conspiracy theories about the shooter has taken hold, including on YouTube's own platform.

It's an issue that has reared its head for YouTube in particular after a number of recent incidents, including the school shooting in Parkland, Florida, and the deadly mass shooting at a Las Vegas music festival. And now it has moved on to this week's tragedy.

SEE ALSO: The YouTube algorithm is promoting Las Vegas conspiracy theories

Already, some conspiracy theorists are spreading the idea that the shooter, Nasim Aghdam, was a victim of free speech suppression. And, of course, there are accusations that Aghdam was a member of the "deep state."

But there's a quieter, far more disturbing theory proliferating on YouTube itself: that Aghdam isn't even real. While it hasn't pushed its way to the top of YouTube's trending list like some of the Parkland "crisis actor" theories, it has gained a little traction while also breathing new life into another issue entirely.

The video in question, "Y Does the Youtube Shooter Looks Like An A.I. Computer Program?", has garnered only around 86,000 views, but it's still quite a weird one: it suggests that Aghdam is actually an AI creation.

It's... yeah.

The video's narrator draws attention to what she considers Aghdam's "stiffness" and compares it to an infamous AI-generated fake Barack Obama video.

While it's one of the most-viewed videos on the topic, it's hardly the only one.

And this is where a conspiracy theory can turn dangerous: it contains a kernel of truth. Face-swapped, AI-generated fake videos, known as deepfakes, are indeed a real thing and a real problem.

In case you somehow missed it, deepfake videos have surged in recent months, dominating boards on sites like Reddit and plaguing sites like Pornhub, where users have uploaded pornographic videos in which celebrities' faces have been seamlessly stitched onto the actors.

But this kind of technology can be used for far more nefarious things than porn videos. That fake video the conspiracy theorist cited, which uses Obama's image to make him say something he never actually said? It's real, and it's terrifying.

And it has given these folks a basis of truth on which to build what is otherwise an insane idea, one that also requires believing that the YouTube shooting was a false flag operation involving one of the largest tech companies in the world (Google, which owns YouTube).

YouTube and Google have both faced harsh criticism for the way their algorithms push fake news (even on kids' apps), and both have promised they're trying to take better control of it. YouTube even recently said it would start using Wikipedia information to help viewers suss out what's real and what's not, though Wikipedia is hardly an authoritative source.

But this week's shooting has directly affected YouTube (and Google) in a way none of the previous incidents did, so the problem will likely get more attention. That's a good thing, but these conspiracies evolve and become harder to pin down, and fighting them is going to take all the energy and resources these companies can muster.


Ruggable x Jonathan Adler launch: See the new designs

Ruggable x Jonathan Adler launch: See the new designs

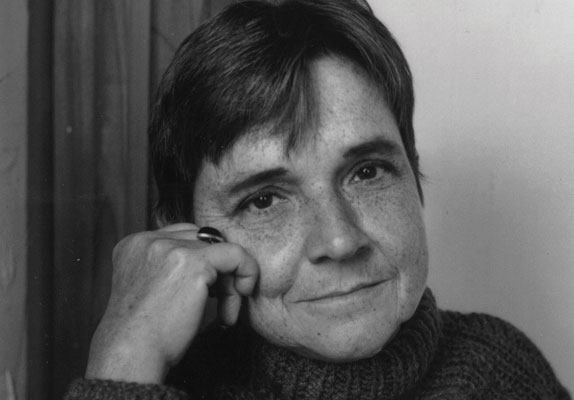

Adrienne Rich by Robyn Creswell

Adrienne Rich by Robyn Creswell

How to GIF YouTube videos in 10 simple steps

How to GIF YouTube videos in 10 simple steps

WBAI Celebrates Issue 200 by The Paris Review

WBAI Celebrates Issue 200 by The Paris Review

Greenpeace activists charged after unfurling 'Resist' banner at Trump Tower in Chicago

Activists protesting the Trump administration's rollback of U.S. environmental and climate policies

...[Details]

Activists protesting the Trump administration's rollback of U.S. environmental and climate policies

...[Details]

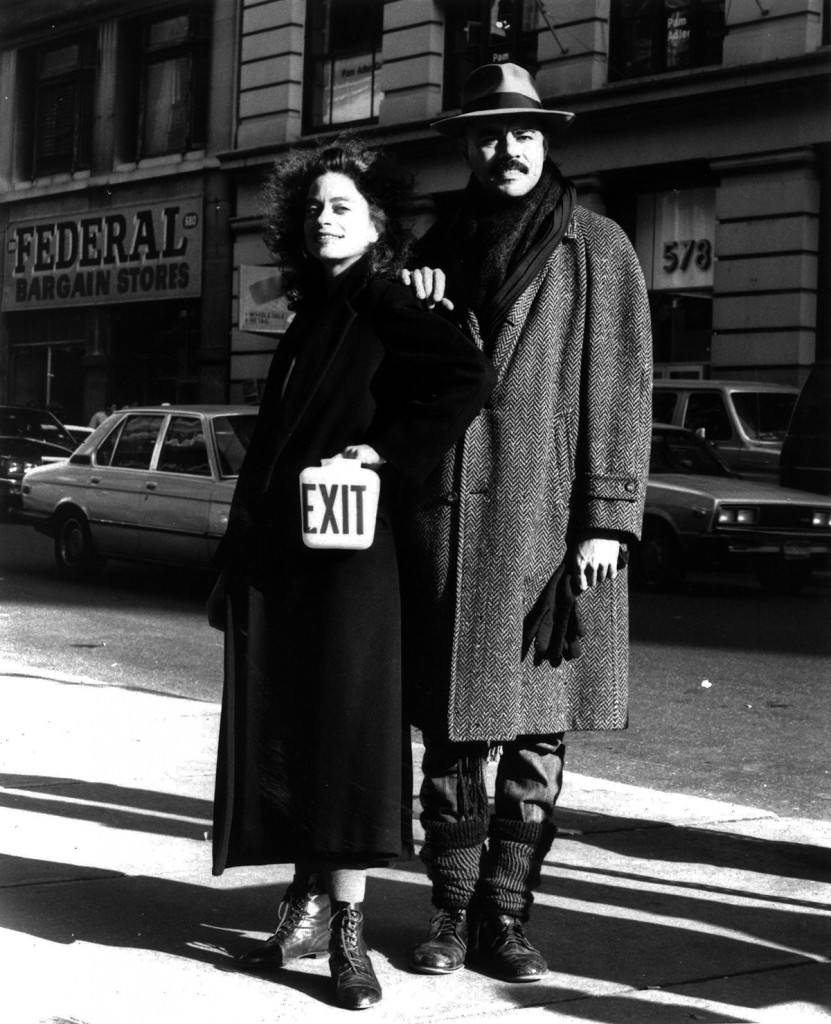

Exit Art, 1982–2012 by Hua Hsu

Exit Art, 1982–2012By Hua HsuApril 12, 2012Arts & CultureJeannette Ingberman and Papo Colo in fr

...[Details]

Exit Art, 1982–2012By Hua HsuApril 12, 2012Arts & CultureJeannette Ingberman and Papo Colo in fr

...[Details]

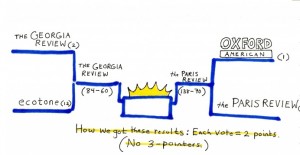

Vote for TPR in the Final! by Sadie Stein

Vote for TPR in the Final!By Sadie SteinApril 6, 2012BulletinThanks to our fan loyalty, we have made

...[Details]

Vote for TPR in the Final!By Sadie SteinApril 6, 2012BulletinThanks to our fan loyalty, we have made

...[Details]

Best audio workout apps for training without a screen

Since your gym is still closed and will be for a while, the world is now your workout space. But for

...[Details]

Since your gym is still closed and will be for a while, the world is now your workout space. But for

...[Details]

Elon Musk told Donald Trump what to do about the Paris Climate Agreement

The Trump administration may finally be nearing a decision on whether to stay in the Paris Climate A

...[Details]

The Trump administration may finally be nearing a decision on whether to stay in the Paris Climate A

...[Details]

Pig butchering romance scam: One victim out $450,000

Romance scams are unfortunately common. Swindlers gain people's trust by pretending to date them onl

...[Details]

Romance scams are unfortunately common. Swindlers gain people's trust by pretending to date them onl

...[Details]

Rejections, Slush, and Turkeys: Happy Monday! by Sadie Stein

Rejections, Slush, and Turkeys: Happy Monday!By Sadie SteinApril 16, 2012On the ShelfDora Saint, the

...[Details]

Rejections, Slush, and Turkeys: Happy Monday!By Sadie SteinApril 16, 2012On the ShelfDora Saint, the

...[Details]

Reading in New York; Reading of London by Lorin Stein

Reading in New York; Reading of LondonBy Lorin SteinApril 20, 2012Ask The Paris ReviewMy apartment i

...[Details]

Reading in New York; Reading of LondonBy Lorin SteinApril 20, 2012Ask The Paris ReviewMy apartment i

...[Details]

Sinner vs. Shelton 2025 livestream: Watch Australian Open for free

TL;DR:Live stream Sinner vs. Shelton in the 2025 Australian Open for free on 9Now. Access this free

...[Details]

TL;DR:Live stream Sinner vs. Shelton in the 2025 Australian Open for free on 9Now. Access this free

...[Details]

Wordle today: Here's the answer and hints for May 12

Can't get enough of Wordle? Try Mashable's free version now I

...[Details]

Can't get enough of Wordle? Try Mashable's free version now I

...[Details]

接受PR>=1、BR>=1,流量相当,内容相关类链接。